‘Set and forget’ machine learning delivers NASA prize-winning space innovation

If you send a robot to the Moon, you’ve got to be sure it can do its job without constant human supervision.

That’s why AIML’s expertise in coding, machine learning and robotic vision played a vital role in helping a 40-member University of Adelaide team win US$75,000 in the NASA Space Robotics Challenge.

Of the 114 international teams that entered the challenge, only 22 advanced to the final stage. Team Adelaide finished in third place and, in addition to their cash prize, received one of only two innovation awards at a livestreamed award ceremony at NASA’s Space Center in Houston, Texas, last week. The University’s core team consisted primarily of honours, master's and PhD students.

The NASA challenge saw teams compete to develop computer code to control virtual space ‘robots’ working across a simulated lunar landscape.

Professor Tat-Jun Chin, AIML’s Director of Machine Learning for Space, said it was not a simple problem to solve.

“We were provided with a simulated moon environment, and we had to design algorithms to control a robot to move around on that surface,” Professor Chin said.

Student Ravi Hammond is undertaking his master's degree in artificial intelligence and was a team lead on the project.

“NASA set competitors the task of using simulations to program a team of six autonomous robots to find, extract and haul resources from a lunar environment,” Ravi said.

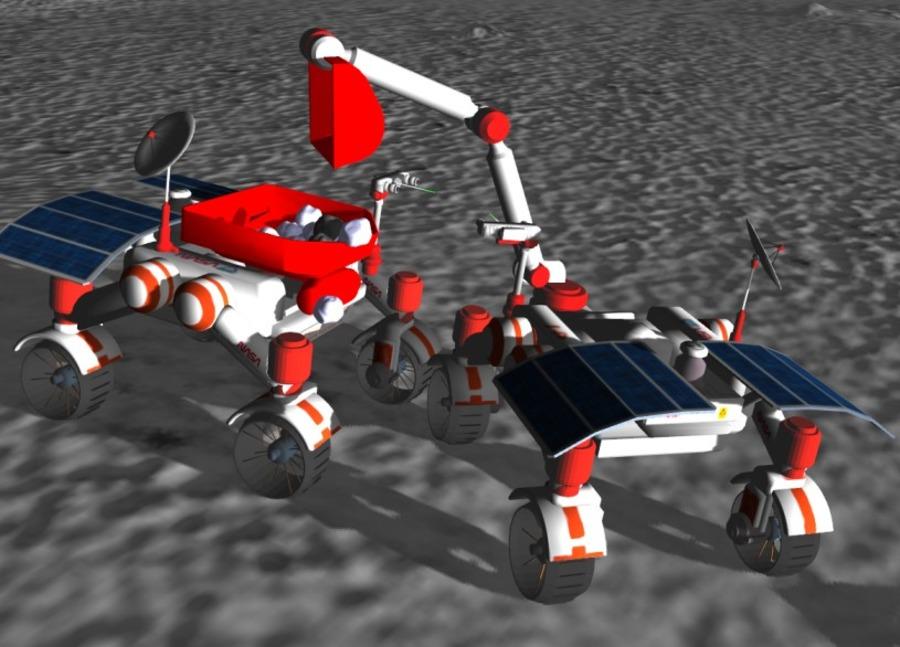

One of Team Adelaide's scout rovers navigating obstacles in NASA's virtual lunar landscape.

Communicating with a human space exploration crew is relatively simple: give instructions, send the astronauts out and wait for them to report back with what they find.

But for robots, it’s more complex. For one thing, they don’t understand plain written or spoken instructions. They also don’t have eyes to view the environment, and even if they have cameras, they don’t have a brain to interpret the visual information and make decisions based on what they see.

Working with their teammates, Ravi and fellow project lead Ragav Sachdeva used the simulated environment to set up two groups of autonomous robots. Here, ‘autonomous’ means that each robot carries out its tasks according to directions provided by on-board code, without needing further instructions in real time.

“We set up a scout, a hauler and an excavator robot in each team,” Ragav explained.

“Each robot relied on robotic vision to navigate, avoid obstacles, interact with the other robots and recover from any operational errors.”

AIML is ranked among the top three institutions worldwide for computer vision research and publishes high-impact research on machine learning and artificial intelligence. The team applied these capabilities successfully in the NASA challenge.

“Our Space Robotics Challenge program begins by initialising the locations of the rovers and the base stations,” Ravi said.

“Then the scouts use a spiral search pattern to find resources scattered throughout the map.”
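The article does not say how the team's spiral search was coded. As an illustration only, here is a minimal Python sketch of one common variant, an outward square spiral on a flat 2D plane. The function `spiral_waypoints`, its parameters and the square (rather than, say, circular) shape of the spiral are assumptions, not Team Adelaide's implementation.

```python
from typing import List, Tuple


def spiral_waypoints(start_x: float, start_y: float, step: float,
                     count: int) -> List[Tuple[float, float]]:
    """Return `count` waypoints tracing an outward square spiral.

    Hypothetical illustration, not the team's code: leg lengths grow
    1, 1, 2, 2, 3, 3, ... steps, with a 90-degree counter-clockwise turn
    after each leg, so each loop sweeps a larger area around the start.
    """
    # Unit headings in counter-clockwise order: east, north, west, south.
    headings = [(1, 0), (0, 1), (-1, 0), (0, -1)]
    x, y = start_x, start_y
    waypoints: List[Tuple[float, float]] = []
    leg_length = 1  # number of steps on the current leg
    heading = 0     # index into `headings`
    while len(waypoints) < count:
        # Each leg length is used for two consecutive legs before growing.
        for _ in range(2):
            dx, dy = headings[heading]
            for _ in range(leg_length):
                x += dx * step
                y += dy * step
                waypoints.append((x, y))
                if len(waypoints) == count:
                    return waypoints
            heading = (heading + 1) % 4
        leg_length += 1
    return waypoints


# Usage: at a 1-unit step, the first eight waypoints visit every
# grid cell surrounding the start point.
print(spiral_waypoints(0, 0, 1, 8))
# → [(1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1)]
```

Because each loop sits right next to the previous one, spacing the loops no wider than a scout's sensing range would sweep the area around the start point without gaps. The sketch ignores obstacles, terrain and map boundaries, which a real rover would have to handle.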

Ravi explained that once a resource is found, the scout sits on top of it to act as a visual marker. The excavator and the hauler then initiate a rendezvous procedure. Once the rendezvous is complete, the scout can leave the area to find more resources.

“Then the excavator and the hauler dig up the resources and bring them back to base,” Ravi said.
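The hand-off described above (a scout finds and marks a resource, the excavator and hauler rendezvous with it, the scout moves on, and the resources are dug up and taken back to base) can be read as a pair of simple state machines. The sketch below is a hypothetical Python rendering of that reading. The class names, state names and boolean inputs such as `hauler_full` are illustrative assumptions; the article does not describe the team's actual control code.

```python
from enum import Enum, auto


class ScoutState(Enum):
    SEARCHING = auto()  # following the spiral search pattern
    MARKING = auto()    # parked on a found resource as a visual marker


class PairState(Enum):
    WAITING = auto()     # excavator and hauler have no marked resource yet
    RENDEZVOUS = auto()  # driving to the scout's marker
    DIGGING = auto()     # excavator digs and loads the hauler
    RETURNING = auto()   # loaded resources are carried back to base


def next_scout_state(state: ScoutState, resource_found: bool,
                     rendezvous_complete: bool) -> ScoutState:
    """Hypothetical scout transition rule based on the article's description."""
    if state is ScoutState.SEARCHING and resource_found:
        # Stop on top of the resource so the other robots can find it.
        return ScoutState.MARKING
    if state is ScoutState.MARKING and rendezvous_complete:
        # Excavator and hauler have arrived; the scout resumes searching.
        return ScoutState.SEARCHING
    return state


def next_pair_state(state: PairState, marker_available: bool, at_marker: bool,
                    hauler_full: bool, at_base: bool) -> PairState:
    """Hypothetical transition rule for the excavator-hauler pair."""
    if state is PairState.WAITING and marker_available:
        return PairState.RENDEZVOUS
    if state is PairState.RENDEZVOUS and at_marker:
        return PairState.DIGGING
    if state is PairState.DIGGING and hauler_full:
        return PairState.RETURNING
    if state is PairState.RETURNING and at_base:
        # Load delivered; wait for the next marked resource.
        return PairState.WAITING
    return state


# Usage: a scout that finds a resource stops to mark it.
scout = next_scout_state(ScoutState.SEARCHING, resource_found=True,
                         rendezvous_complete=False)
print(scout.name)  # → MARKING
```

Keeping each robot's logic as a small table of transitions driven by sensed conditions is one simple way to make the hand-off explicit; in practice, those conditions would come from the robots' vision and localisation.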

An excavator robot digs virtual resources and loads them into a hauler robot. Each robot was able to carry out its tasks without the need for further instructions given in real time.

Throughout the whole operation, the rovers avoid obstacles using object detection and depth estimation capabilities that the team members also programmed.

With their winning entry, Ravi, Ragav and other team members scored an average of about 266 points per two-hour simulation run, a score that reflects how many resources were collected in that time. Over 44 simulation hours, in which their robots travelled about 120 km, they observed no navigational or equipment failures.

“Our team… which was the only one from Australia to participate in the NASA Space Robotics Challenge, competed against teams from the world’s top universities, as well as corporate and private groups,” said Associate Professor John Culton, Director of the Andy Thomas Centre for Space Resources at the University of Adelaide.

NASA recognises the important role that autonomous systems might play in taking care of some of the necessary but monotonous tasks expected in future human spacefaring expeditions.

“Autonomous robotic systems like those developed for this challenge could assist future astronauts during long-duration surface missions, allowing humans to focus on the more meticulous areas of exploration,” said Monsi Roman, program manager for NASA’s Centennial Challenges.

Team Adelaide’s NASA achievement speaks to the international capability of Australia’s growing space technology sector. AIML is located at Lot Fourteen, South Australia’s innovation precinct, which is also home to the Australian Space Agency, the Australian Space Discovery Centre and several private space and telecommunications companies.

Team leads Ravi Hammond and Ragav Sachdeva discuss the team's award-winning approach to the NASA Space Robotics Challenge.