2022 soccer World Cup winners and losers

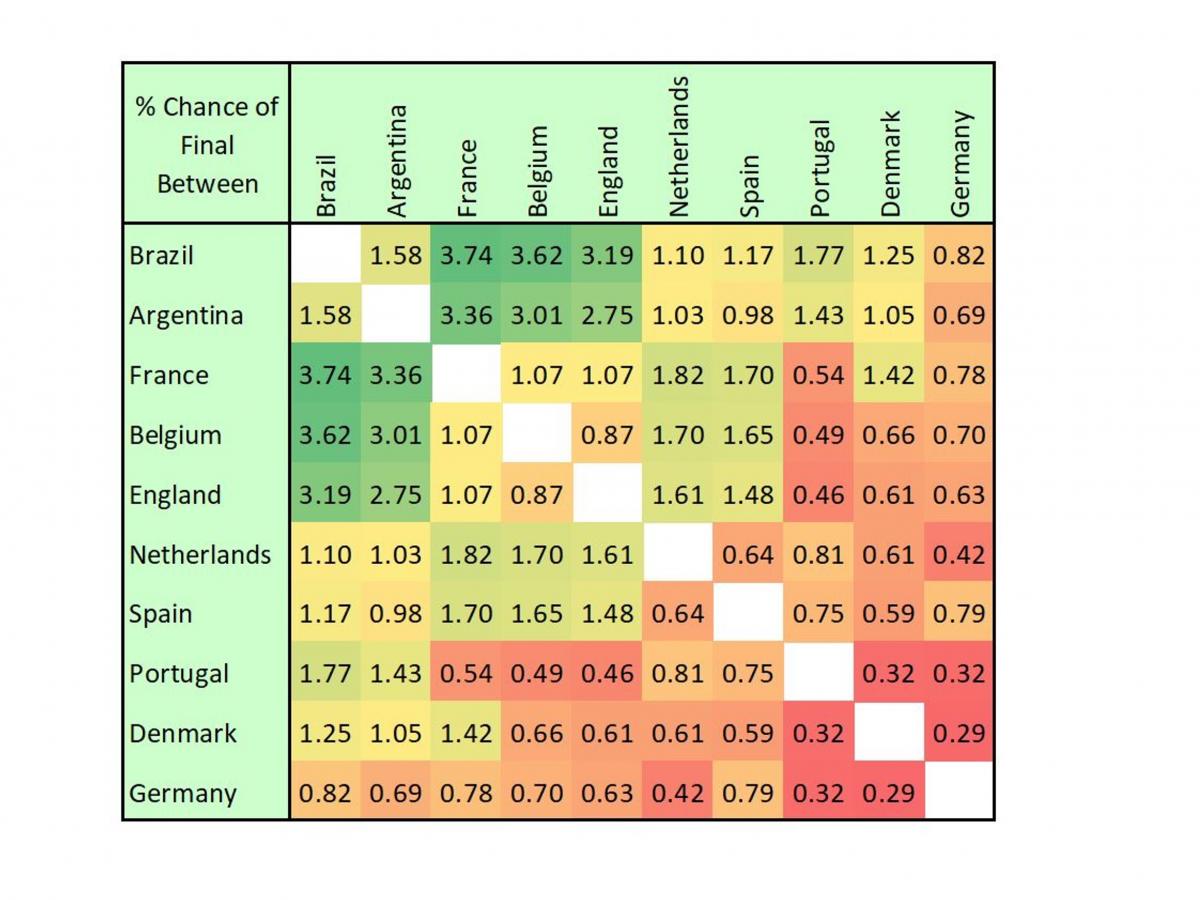

Probability of a final between the top ten teams most likely to win the 2022 FIFA World Cup.

Simulation modelling shows that the FIFA World Cup, which kicks off on 21 November in Qatar, is most likely to be won by either Brazil or Argentina.

The University of Adelaide’s Emeritus Professor Steve Begg is Professor of Decision-making and Risk Analysis at the Australian School of Petroleum and Energy Resources.

“While Brazil and Argentina are the most likely winners of the World Cup, a final between them is relatively unlikely (1.6%),” he says.

“The most likely final is between Brazil and France (3.7%), followed by Belgium vs Brazil (3.6%) and Argentina vs France (3.4%). The Socceroos have a 16.9% chance of making the Round of 16 but only a slim chance of success.

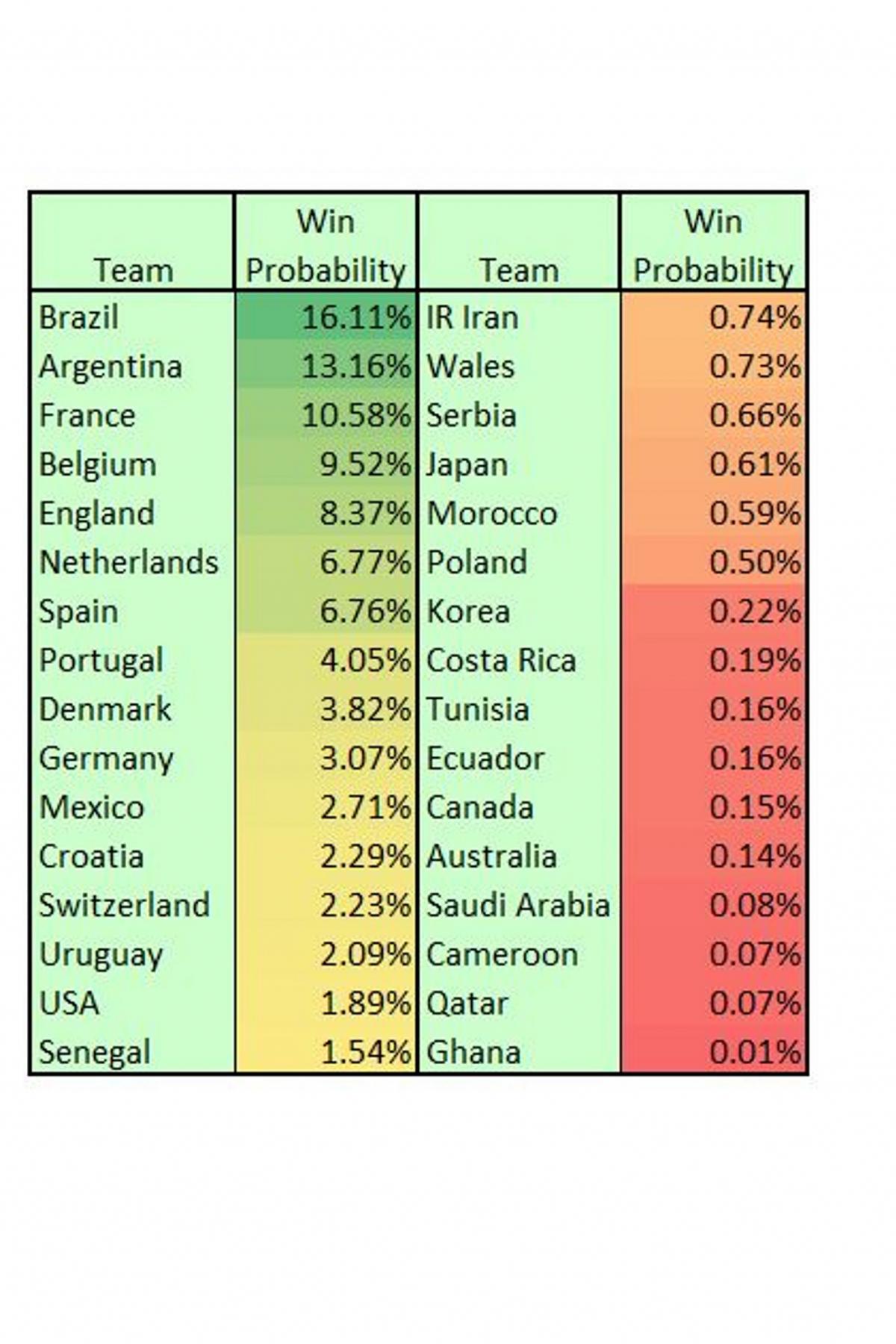

“Belgium, despite being the second-best team in the world according to FIFA, has only a 9.5% chance of winning the 2022 FIFA World Cup Qatar. Australia has a 17% chance of getting to the Round of 16, but only a 0.14% chance of winning. England has an 8.4% chance of winning.”

Decision-making under uncertainty, risk assessment and the psychological and judgmental factors that impact them are at the heart of Emeritus Professor Begg’s teaching and research.

“For the FIFA World Cup I have developed a ‘Monte Carlo simulation’ of the competition, based on team rankings with other input including recent form,” he says.

The Monte Carlo technique was developed in the 1940s by scientists working on nuclear weapons at Los Alamos, the laboratory at the heart of the Manhattan Project to develop the atomic bomb.

Probability of winning the 2022 FIFA World Cup for each team (prior to the start of the tournament).

“The key idea is that rather than trying to work out every possible outcome of a complex system, enough possibilities are modelled to be able to estimate the chance of any particular outcome occurring,” says Professor Begg.
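To make the key idea concrete, here is a minimal Monte Carlo sketch for a hypothetical four-team knockout bracket. The team names, ratings and the Bradley-Terry win-probability rule are illustrative assumptions, not inputs to Professor Begg's model. Because this bracket is tiny, the simulated title chances can be checked against an exact enumeration; for a 32-team tournament with a group stage, enumerating every outcome is impractical, which is why sampling is used instead.

```python
import random
from collections import Counter

# Hypothetical strength ratings (illustrative only, not Professor Begg's inputs).
RATINGS = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0}


def win_prob(a, b):
    """Assumed Bradley-Terry rule: chance that team a beats team b."""
    return RATINGS[a] / (RATINGS[a] + RATINGS[b])


def play(a, b, rng):
    """Simulate one knockout match and return the winner."""
    return a if rng.random() < win_prob(a, b) else b


def simulate_bracket(rng):
    """Semi-finals A v D and B v C, then a final between the two winners."""
    finalist_1 = play("A", "D", rng)
    finalist_2 = play("B", "C", rng)
    return play(finalist_1, finalist_2, rng)


def monte_carlo(n_runs, seed=1):
    """Estimate each team's title chance as its share of n_runs simulated tournaments."""
    rng = random.Random(seed)
    wins = Counter(simulate_bracket(rng) for _ in range(n_runs))
    return {team: wins[team] / n_runs for team in RATINGS}


def exact():
    """Enumerate every outcome of this tiny bracket, for comparison."""
    probs = {team: 0.0 for team in RATINGS}
    for f1, p1 in (("A", win_prob("A", "D")), ("D", win_prob("D", "A"))):
        for f2, p2 in (("B", win_prob("B", "C")), ("C", win_prob("C", "B"))):
            probs[f1] += p1 * p2 * win_prob(f1, f2)
            probs[f2] += p1 * p2 * win_prob(f2, f1)
    return probs


if __name__ == "__main__":
    estimate, truth = monte_carlo(100_000), exact()
    for team in RATINGS:
        print(f"{team}: simulated {estimate[team]:.3f}, exact {truth[team]:.3f}")
```

With 100,000 simulated brackets the estimates typically land within a few tenths of a percentage point of the exact values, without the model ever listing the outcomes explicitly.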

“The outcomes of many decisions we make are uncertain because of things outside of our control.

“Modelling uncertainty in the World Cup shows the many ways the whole tournament might play out. What makes it so hard to predict is not just uncertainty in how a team will perform in general, but random factors that can occur in each match and the complexity of the tournament rules.

“Humans have not evolved an intuitive ability to accurately assess the probabilities of outcomes from complex, uncertain systems, such as the World Cup. Lots of research shows we are really bad at it. The complexity and uncertainty often lead to non-intuitive outcomes so we need models to help us.

“The odds offered by sports betting companies are not based on actual probabilities, but on the number of people betting on an outcome, which is in turn based on their intuitive judgements or desired outcomes, so not necessarily a good reflection of the real probability.”


In the simulation, Professor Begg has modelled 500,000 possible ways the whole tournament could play out. Although the number of possibilities is vast (there are about 110 billion unique ways that the group stage alone could play out), 500,000 runs are “more than enough” for a good assessment of the probability of how far each team will progress.
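Two quick calculations put these numbers in context. First, the “about 110 billion” figure matches the number of distinct finishing orders across the eight four-team groups (4! = 24 orders per group, and 24⁸ ≈ 1.1 × 10¹¹); that this is what the figure counts is an assumption. Second, the standard binomial error of a simulated probability, √(p(1 − p)/N), suggests why 500,000 runs are plenty: even for a long shot such as Australia's 0.14%, the sampling error is only about 0.005 percentage points.

```python
import math

GROUPS = 8            # the 2022 tournament had eight groups
TEAMS_PER_GROUP = 4   # of four teams each

# Assumption: "about 110 billion" counts the distinct finishing orders of all groups.
orders_per_group = math.factorial(TEAMS_PER_GROUP)   # 24
group_stage_orders = orders_per_group ** GROUPS      # 24**8


def mc_standard_error(p, n_runs):
    """Binomial standard error of a probability estimated from n_runs independent simulations."""
    return math.sqrt(p * (1.0 - p) / n_runs)


if __name__ == "__main__":
    print(f"{group_stage_orders:,} possible group finishing orders")
    for label, p in [("Australia wins", 0.0014), ("Belgium wins", 0.095),
                     ("Australia reaches Round of 16", 0.17)]:
        se = mc_standard_error(p, 500_000)
        print(f"{label}: {p:.2%} ± {se:.3%} (one standard error)")
```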

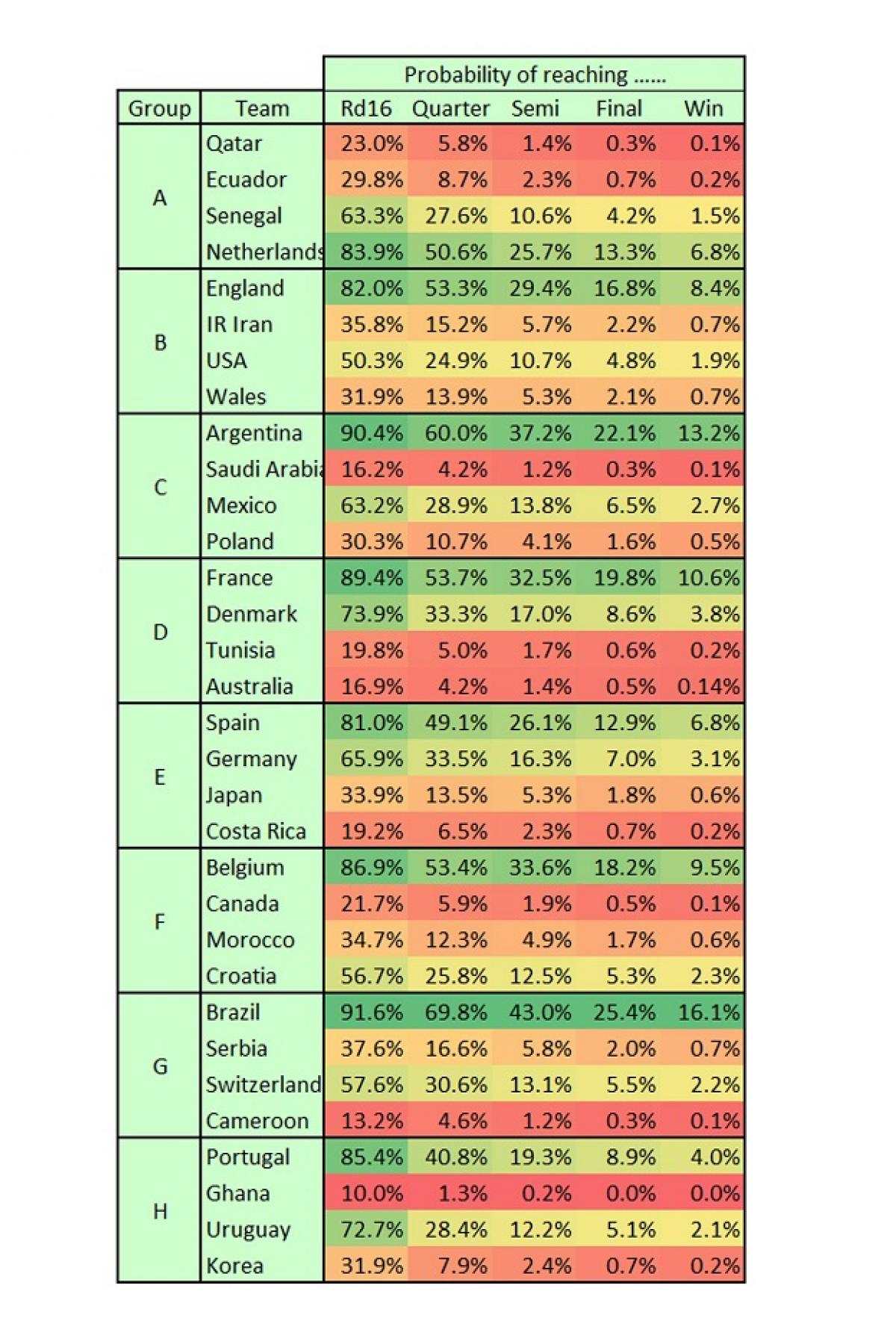

Probability of position at each stage of the 2022 FIFA World Cup (prior to the start of the tournament).

In Professor Begg’s model, two key uncertainties are a team’s “tournament form” (their general level of performance over the course of the competition) and a team’s “match form” (the extent to which the team plays better or worse than its tournament form in a specific match). The score for a game is based on the relative match form of the two teams and on the likelihood of possible score combinations, derived from the last six World Cups.
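One way to picture these two layers of uncertainty is the following sketch. It is not Professor Begg's model: the normal distributions, every numeric parameter, and especially the Poisson goal model (a stand-in for the historical score-combination likelihoods from the last six World Cups, which are not reproduced here) are assumptions chosen for illustration. Tournament form is drawn once per team for each simulated tournament; match form is then drawn afresh around it for every match.

```python
import math
import random

# Hypothetical parameters, chosen only for illustration.
TOURNAMENT_FORM_SD = 0.3  # uncertainty in a team's form over the whole competition
MATCH_FORM_SD = 0.5       # how far a team can play above or below that form in one match
BASE_GOALS = 1.3          # assumed mean goals per team when the sides are evenly matched
GOALS_PER_FORM = 0.4      # assumed sensitivity of scoring rate to the match-form difference


def poisson(lam, rng):
    """Draw a Poisson variate with Knuth's multiplication method (fine for small lam)."""
    limit, k, prod = math.exp(-lam), 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def draw_tournament_form(rating, rng):
    """Tournament form: drawn once per team for each simulated tournament."""
    return rng.gauss(rating, TOURNAMENT_FORM_SD)


def simulate_match(form_a, form_b, rng):
    """Return (goals_a, goals_b) for one match given both teams' tournament form."""
    # Match form: today's deviation from each team's tournament form.
    match_a = rng.gauss(form_a, MATCH_FORM_SD)
    match_b = rng.gauss(form_b, MATCH_FORM_SD)
    diff = match_a - match_b
    # Stand-in score model: the side in better match form gets a higher scoring rate.
    lam_a = BASE_GOALS * math.exp(GOALS_PER_FORM * diff)
    lam_b = BASE_GOALS * math.exp(-GOALS_PER_FORM * diff)
    return poisson(lam_a, rng), poisson(lam_b, rng)


if __name__ == "__main__":
    rng = random.Random(7)
    form_strong = draw_tournament_form(1.0, rng)  # hypothetical ratings
    form_weak = draw_tournament_form(0.0, rng)
    for _ in range(3):
        print(simulate_match(form_strong, form_weak, rng))
```

Keeping the two draws separate means a team that is having a poor tournament tends to stay poor across its matches, while any single match can still produce a surprise.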

The uncertainty in each team’s “tournament form” is based on FIFA rankings over the past four years, modified by Professor Begg’s knowledge of the game and the team’s recent performance. Poorer teams have a relatively greater chance of performing above their current level whereas the better teams have a relatively greater chance of under-performing – so there is the possibility of some “giant killing”.
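The asymmetry described above can be captured with a skewed distribution. The sketch below uses a triangular distribution (Python's `random.triangular`) whose peak sits at the team's rating; the `strength` scale, the `spread` parameter and the bounds formula are hypothetical choices, not the model's actual inputs. With these choices the weakest team has 75% of its probability above its rating and the strongest only 25%, leaving room for the occasional giant killing.

```python
import random


def tournament_form_bounds(rating, strength, spread=1.0):
    """Hypothetical asymmetric range for a team's tournament form.

    strength runs from 0 (weakest team) to 1 (strongest), an assumed rescaling
    of the ranking. Weaker teams get more room above their rating (upside);
    stronger teams get more room below it (downside).
    """
    low = rating - spread * (0.5 + strength)
    high = rating + spread * (1.5 - strength)
    return low, high


def prob_above_rating(rating, strength, spread=1.0):
    """Exact P(form > rating) for a triangular distribution whose mode is the rating."""
    low, high = tournament_form_bounds(rating, strength, spread)
    return (high - rating) / (high - low)


def draw_tournament_form(rating, strength, rng, spread=1.0):
    """Sample one tournament form; random.triangular takes (low, high, mode)."""
    low, high = tournament_form_bounds(rating, strength, spread)
    return rng.triangular(low, high, rating)


if __name__ == "__main__":
    for label, strength in [("weakest", 0.0), ("middle", 0.5), ("strongest", 1.0)]:
        print(f"{label}: P(play above rating) = {prob_above_rating(0.0, strength):.0%}")
```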

“Probability is subjective, it depends on what you know,” says Professor Begg. “You don’t need data of past outcomes of exactly the same ‘event’; you use what information you have to assign a degree of belief in what might happen, and thus make decisions or, in this case, judgements of probabilities. The crucial thing is that your information and reasoning is not biased.”

Professor Begg has calculated that Australia’s national team, the Socceroos, has a 17% chance of advancing through the group stage, 4.2% of making the quarters, 1.4% of the semis, 0.45% of being in the final, and 0.14% chance of being crowned as champions.

“To make good decisions, it is really important to base beliefs on evidence and reason, not on gut-feel or what you would like to be true, like the Socceroos becoming World Cup champions,” he says.
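Because a team must reach each stage before it can reach the next, the Socceroos' figures also imply the chance of clearing each knockout step once there: P(next | previous) = P(next) / P(previous). The short calculation below uses only the probabilities quoted in this article; since those are rounded, the ratios are approximate.

```python
# Probabilities reported for the Socceroos in the article (rounded).
STAGES = [
    ("Round of 16", 0.17),
    ("quarter-finals", 0.042),
    ("semi-finals", 0.014),
    ("final", 0.0045),
    ("champions", 0.0014),
]


def conditional_progression(path):
    """Chance of reaching each stage given that the previous one was reached.

    Reaching a later stage implies reaching every earlier one, so
    P(next | previous) = P(next) / P(previous).
    """
    return [
        (stage, p / prev_p)
        for (_, prev_p), (stage, p) in zip(path, path[1:])
    ]


if __name__ == "__main__":
    for stage, q in conditional_progression(STAGES):
        print(f"P({stage} | previous stage) = {q:.1%}")
```

With these inputs the ratios come out at roughly 25% for the Round of 16 match and about 31 to 33% for each later step: once through the groups, each knockout match is a long shot, and the small chances multiply.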

The accuracy of the model has been checked against the typical number of wins, draws, goals per match and total goals in previous World Cup tournaments.

But the real test is how it stands up to actual outcomes. “To assess the accuracy of probabilities you cannot draw conclusions from a single outcome or observation. You have to pick a probability, say 70%, and observe how frequently events that have that probability actually occur,” says Professor Begg.

“If they occur about 70% of the time the probability assessment is accurate. If they occur 90% of the time then the assessment is underconfident. If they occur 50% of the time it is overconfident, which is typical when people, including experts, make these kinds of judgements intuitively.”

This process is repeated for a range of probabilities from 0 to 100%. If the frequencies of actual outcomes are close to the assessed probabilities, the model is accurate, or well-calibrated.
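The calibration check described above can be sketched as follows. The function names, the ten equal-width bins and the 5-percentage-point tolerance are illustrative assumptions, not Professor Begg's procedure. The `verdict` helper generalises the 70% example: if outcomes turn out less extreme than the forecasts (closer to 50%), the forecasts were overconfident; if more extreme, underconfident.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes, n_bins=10):
    """Group forecasts into probability bins and compare with observed frequencies.

    forecasts: probabilities in [0, 1]; outcomes: 1 if the event happened, else 0.
    Returns a list of (bin_low, bin_high, mean_forecast, observed_freq, count).
    """
    bins = defaultdict(list)
    for p, hit in zip(forecasts, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        bins[idx].append((p, hit))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_p = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(hit for _, hit in pairs) / len(pairs)
        table.append((idx / n_bins, (idx + 1) / n_bins, mean_p, freq, len(pairs)))
    return table


def verdict(mean_p, freq, tol=0.05):
    """Classify one bin; tol is an assumed tolerance for 'close enough'."""
    if abs(freq - mean_p) <= tol:
        return "well-calibrated"
    # Forecasts further from 50% than reality means they were too extreme.
    return "overconfident" if abs(mean_p - 0.5) > abs(freq - 0.5) else "underconfident"


if __name__ == "__main__":
    # Ten events forecast at 70%, nine of which happened (the article's 90% case).
    for low, high, mean_p, freq, n in calibration_table([0.7] * 10, [1] * 9 + [0]):
        print(f"{low:.1f}-{high:.1f}: forecast {mean_p:.0%}, observed {freq:.0%} "
              f"over {n} events -> {verdict(mean_p, freq)}")
```

In practice each bin needs many events before its observed frequency means much, which is why a single tournament's outcomes are not enough on their own.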

Professor Begg has carried out this process by comparing the outcomes of the 2018 World Cup and the 2020 Euros with his model's probabilities.

The results show that his modelling is very well-calibrated, and compares favourably with popular media predictors of previous tournaments such as ‘Paul the Octopus’ and ‘Achilles the Cat’.

Media contacts:

Emeritus Professor Steve Begg, University of Adelaide.

Mobile: +61 (0)422 008 984, Email: steve.begg@adelaide.edu.au

Crispin Savage, Manager, Media and News, University of Adelaide.

Mobile: +61 (0)481 912 465, Email: crispin.savage@adelaide.edu.au