Increasing marking efficiency using artificial intelligence (AI)

Gradescope’s AI functionality can support and accelerate marking and grading in certain assessment contexts.

In 2021, Dr Cheryl Pope successfully piloted Gradescope in Computer Science courses, focusing on automated marking of student program code and code similarity checking. The pilot found that the tool also lets educators automatically group similar answers and award a mark to every answer in a group at once. Feedback common to all answers in a group can be written once and applied to all of them.

"Gradescope made tracking exam marking progress across a large class much easier and more accurate. By not having to coordinate sharing papers across multiple markers and being able to see how each marker was progressing, I could quickly and easily allocate marking, which allowed us to turn around exam results in about 1/2 the time it normally took. The grouping feature allowed us to eliminate all blank answers from the marking with a single grade entry. The rubric feature provided students with a clear indication of strengths/weaknesses without adding to marking time (normally we could not provide feedback on exams due to time constraints). The requests for remarks and errors due to missed questions during marking also reduced." - Dr Cheryl Pope

An extension of the pilot is now underway in selected science courses to evaluate potential marking efficiencies for assignments based on hand-drawn diagrams and symbols. The same advantages apply: Gradescope locates student answers within a document and groups similar responses to streamline marking and feedback. It also provides a dynamic rubric system that supports multiple marking schemes and automated feedback.

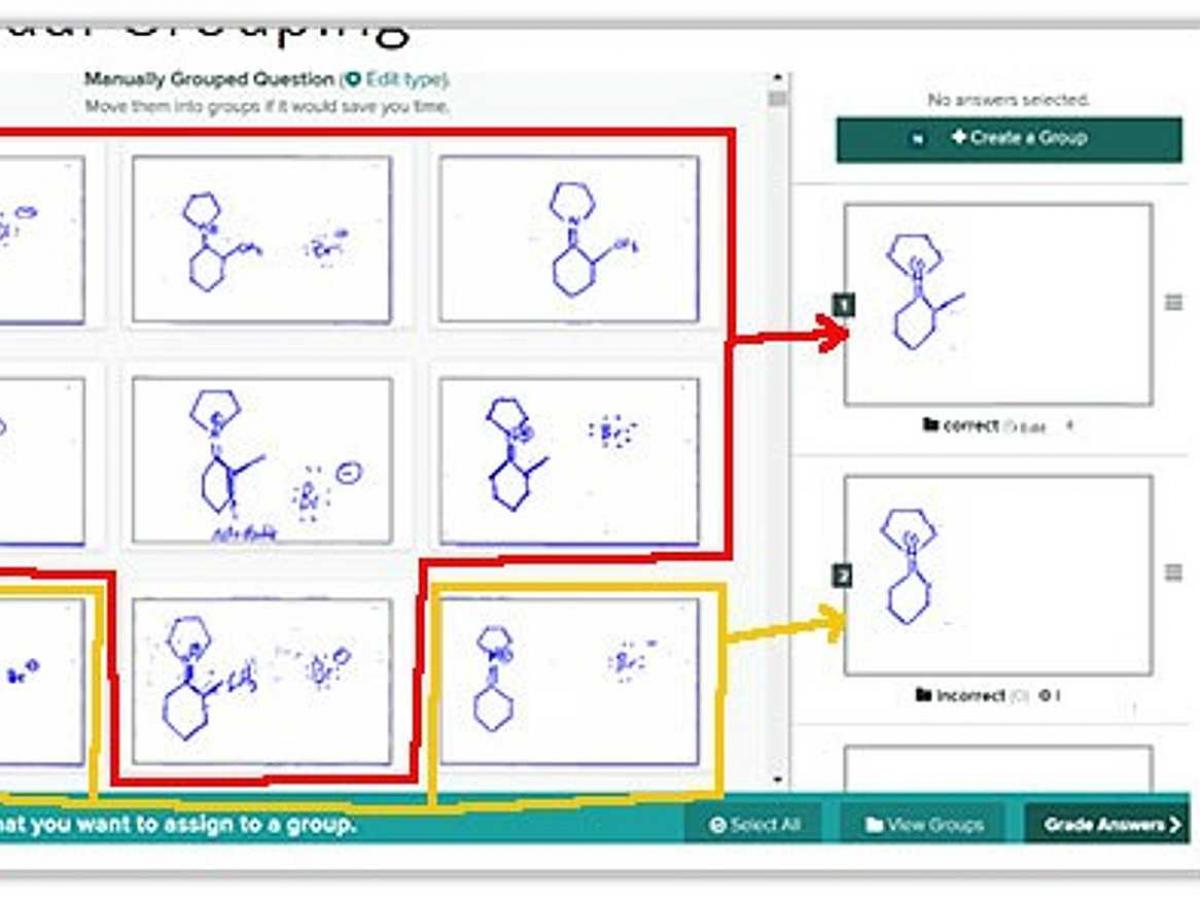

A screenshot of Gradescope grouping similar answers in a chemistry assignment

In chemistry assignments, for example, Gradescope can automatically group similar illustrative answers, as shown above.

Responses that fall below the similarity threshold are assigned to groups manually. Where students share a common misunderstanding, their incorrect answers are grouped automatically. Detailed, consistent feedback can then be applied to all of them at once.

If any answers are grouped incorrectly, the marker can click and drag them into the appropriate group. Rubrics are also dynamic. They can be created and extended as groups of answers reveal distinct feedback requirements, then applied across those groups.

Story by Kym Schutz

Educational Technologist, Learning Enhancement and Innovation (LEI).

In the 2022 Gradescope pilot, we are evaluating the additional grouping and artificial intelligence features that may increase grading efficiency in science courses.

If you coordinate a science course and would like to learn more about this tool, contact Kym Schutz, Educational Technologist, Learning Enhancement and Innovation.