A very short history of GPTs before ChatGPT

For many of us, artificial intelligence (AI) seemed to arrive with ChatGPT. But the field of AI is hardly new (the term itself is almost 70 years old), and the core technology that powers the generative AI software we use today is something University of Adelaide staff and students were working with well before ChatGPT burst onto the scene.

Most AI scientists trace ChatGPT’s origin to a landmark 2017 paper by eight researchers at Google titled ‘Attention Is All You Need’ (Vaswani et al., 2017), which proposed a novel AI architecture called the transformer. A key aspect of their approach was self-attention: a mechanism in which the AI model weighs how relevant every word in a sentence is to every other word, allowing it to capture context and dependencies between words. The transformer calculates these weights for all words simultaneously, rather than one word at a time, which makes it fast to run. For those of us who aren’t computer scientists, Wired’s ‘8 Google Employees Invented Modern AI. Here’s the Inside Story’ is a fascinating read (Levy, 2024).
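For readers curious about what "weighing the importance of each word" looks like in practice, here is a minimal sketch of the idea in Python. It is an illustration only, not the implementation from the paper: the word vectors are made-up values, the function names are hypothetical, and a real transformer adds learned query, key and value projections, multiple attention heads, and computes everything at once as matrix operations rather than in a loop.

```python
import math

def softmax(scores):
    # Subtract the largest score for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Return (weights, outputs) for a list of equal-length word vectors."""
    dim = len(vectors[0])
    weights, outputs = [], []
    for query in vectors:
        # Score this word against every word (itself included), scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)  # attention weights for this word; they sum to 1
        weights.append(row)
        # The word's new representation is a weighted blend of all word vectors.
        outputs.append(
            [sum(w * v[i] for w, v in zip(row, vectors)) for i in range(dim)]
        )
    return weights, outputs

# Hypothetical 2-dimensional vectors for a three-word sentence (assumed values).
sentence = {"the": [1.0, 0.0], "cat": [0.0, 1.0], "sat": [0.0, 0.9]}
weights, outputs = self_attention(list(sentence.values()))
```

In this toy example, the row of weights for "cat" gives more attention to "sat" than to "the", because their vectors point in similar directions. Each row of `weights` is independent of the others, which is why a transformer can compute them all in parallel.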

The transformer didn’t spark an AI revolution straight away. Google didn’t immediately see its potential, but a small startup named OpenAI did.

Most people know OpenAI from the first version of ChatGPT, which was released in November 2022 and was based on an AI model called GPT-3.5. The chatbot interface is what made ChatGPT immensely popular, but OpenAI’s first GPT model (GPT-1) was published back in June 2018 and created great excitement among AI researchers, engineers and students for its ability to generate human-like written language.

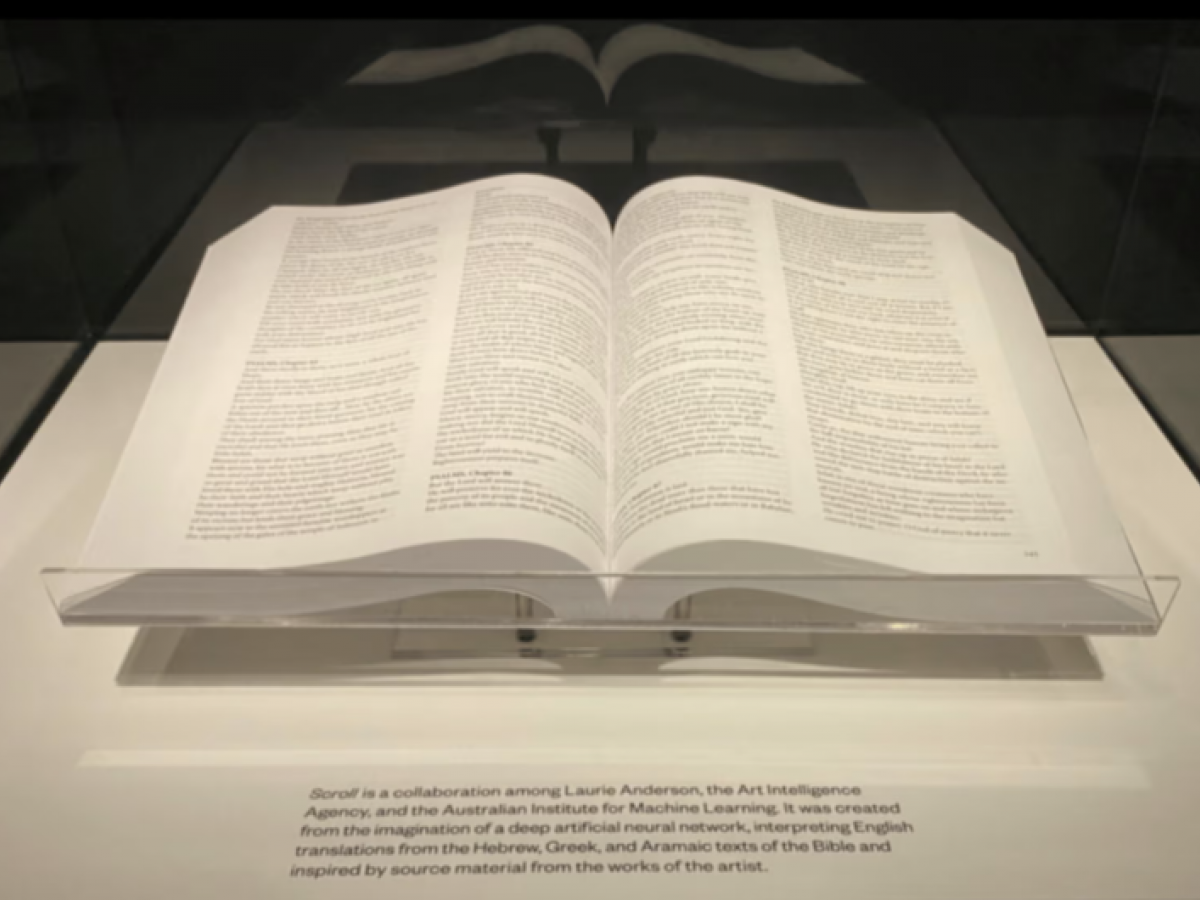

Here at the University, researchers at the Australian Institute for Machine Learning (AIML) have used GPT models creatively. In early 2020, in partnership with the University’s Sia Furler Institute, AIML hosted its first artist-in-residence: American avant-garde creative pioneer Laurie Anderson, who wanted to explore the artistic possibilities of then-new AI language models.

Her AI-enabled artworks were later exhibited at the Smithsonian and include a bible written in Anderson’s ‘voice’ using a GPT model trained on religious texts and her own writings. AIML also built an AI chatbot trained on the lyrics, writings, and interviews of her late husband, musician Lou Reed. These projects may seem typical of the global AI hype we’re used to today, but they were prescient and cutting-edge at the time.

As AI continues to develop, its rapid pace may seem intimidating. The University of Adelaide is playing a crucial role, locally and globally, in building future technology and skills. Whether through innovative approaches to teaching in a digital world, or leading research forging new pathways of discovery, there’s no better place to explore AI than the dynamic environment of the university campus.

Article by Eddie Major - Coordinator for Artificial Intelligence in Learning and Teaching